I ignored the new hotel loyalty satisfaction survey from JD Power & Associates when it came out last week, but it seems to be getting a lot of play now, so let’s go over why it does not tell you anything useful.

This isn’t simply one of those “these things are subjective” or “it depends on whom you ask” kinds of criticisms.

The survey is just fundamentally silly. That begins with the factors that go into their ranking:

- account maintenance/management (23%)

- ease of redeeming points/miles (22%)

- ease of earning points/miles (18%)

- reward program terms (16%)

- variety of benefits (16%)

- customer service (5%)

The most heavily weighted factor in the rankings, at nearly a quarter of the total, was ‘account maintenance’. That just doesn’t strike me as the single biggest factor in how valuable a hotel loyalty program is. Note that this is separate from “customer service”, which is its own category though worth just 5% of the survey’s weight.
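To see what those weights do in practice, here is a minimal sketch. It assumes the overall index is a plain weighted average of per-factor scores, which the survey release does not confirm, and the factor scores (700 and 900) are made-up numbers for illustration only.

```python
# Published factor weights from the survey (percent of the overall index).
WEIGHTS = {
    "account maintenance/management": 23,
    "ease of redeeming points/miles": 22,
    "ease of earning points/miles": 18,
    "reward program terms": 16,
    "variety of benefits": 16,
    "customer service": 5,
}


def composite_index(factor_scores, weights=WEIGHTS):
    """Weighted average of per-factor scores (assumed method, not confirmed)."""
    total = sum(weights.values())
    return sum(weights[name] * factor_scores[name] for name in weights) / total


# Hypothetical program scoring 700 on every factor.
baseline = {name: 700 for name in WEIGHTS}

# Same program, but 200 points better on exactly one factor.
great_service = dict(baseline, **{"customer service": 900})
great_account = dict(baseline, **{"account maintenance/management": 900})

print(composite_index(baseline))
print(composite_index(great_service))
print(composite_index(great_account))
```

Under that assumption, a 200-point improvement in account maintenance moves the overall score more than four times as far as the same improvement in customer service.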

This survey is about earning and redeeming only, not about how well a program treats you (elite benefits). And strangely for something focused only on redemptions, it cares about ease of redemption but not the value of what you’re redeeming for.

Meanwhile they draw such strong conclusions from a pretty small sample. They surveyed about 2900 people, down over 20% from last year’s sample size. And that lets them conclude that Delta Privilege and Hilton HHonors are the best. We don’t have the underlying data set, but how many of those 2900 could have been familiar with Delta Privilege?

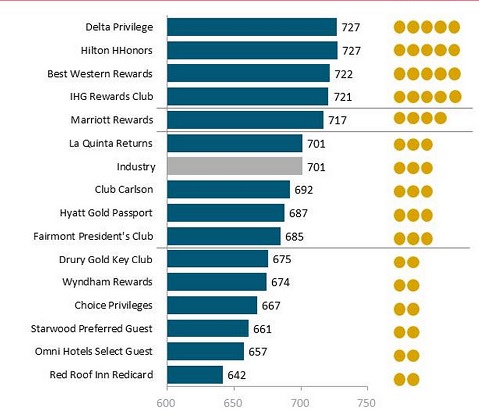

Here’s their bottom line:

La Quinta Returns is judged a better program than Starwood’s, Hyatt’s, and Fairmont’s. The results themselves can be considered self-negating.

Also worth noting and generally not mentioned by media covering these awards is that JD Power sells its research to the subjects of its award rankings and sells companies the right to market their winning of these awards.

I never participate in their surveys. I think it’s just rubbish they sell as though it means anything.

I took the JD Power credit card survey. I thought the questions were just stupid and didn’t accurately help to gauge users’ sentiment about their card and rewards.

I think we all know JD Power ranks according to who pays… I actually consider their award an indicator of a bad product by a company that bribes.

I noted on another blog that Delta Privilege was rated highest for ease of redeeming points. Since they don’t actually have any points to redeem, this would seem to make the results rather suspect!

For the budget traveler, La Quinta Returns is a great loyalty program. Good service, no surprises, misrepresentations, sneaky maneuvers or devaluations, very customer oriented and straightforward.

Granted, there are no high-end (or even mid-range, barely) properties, but for what it is, I can see why it scored high on the JD Power survey.

@Steve T — You make too much sense. The travel bloggers are so buried deep within their little blogosphere echo chamber, reinforcing one another’s — often erroneous — perceptions, that they get shocked when they see some of the choices that real people out there make regarding their preferred loyalty programs.

Like most surveys, the JD Power loyalty schtick does have its issues, but the company has built a decent reputation for “surveys of customer satisfaction, product quality, and buyer behavior for industries ranging from cars to marketing and advertising firm.” Therefore, they cannot be dismissed outright as one would a lesser entity. So, people are talking about this survey, which, in fact, qualitatively agrees with my own perceptions (for example, I rate Hilton Honors much higher than the bloggers because I consider it to be a much more “mature” program). However, unless a survey places Hyatt or SPG at the top, its rating of hotel loyalty programs would not be considered credible by the loyalty/travel bloggers. I have expounded about why the placing of Hyatt GP (a loyalty program that is, at best, a work-in-progress) on a pedestal is misguided, and why SPG is one of the least attractive programs because their awards are too expensive in relation to one’s ability to earn Starpoints, but this has simply been ignored in favor of the conventional travel blogosphere “wisdom”. IHG has been one of the hottest tickets of late, but the bloggers have been absolutely brutal in tearing them down. However, notice that real folks out there do seem to like IHG just fine…

I think that some of these travel bloggers need to get out there in the real world from time to time…;-)

Isn’t Delta Privilege a Canadian only program? I don’t ever remember seeing locations outside of Canada.

I don’t agree with @DCS. Sure, bloggers love SPG and Hyatt, but Hilton is your favorite? I hardly ever stay with SPG or Hyatt because they usually don’t have locations where my business travels take me, but I love SPG points. They are about the most valuable to me. Hilton is so devalued they make Delta skypesos seem like gold. Can we coin the term HHrubles yet? Sure, Hilton has nice properties, but I avoid them like the plague as the HHRUBLES are so worthless to me. Sure, they give out bonuses and you can get points through the credit card, but you need millions of HHRUBLES to get enough free nights for a vacation.

JD Power works for the vendors, not the consumer. They seem to operate like the Honor Student bumper sticker committee at your neighbor’s middle school. Only they get paid by the parents in this case. Maybe Underwriters Laboratories can come out with a hotel loyalty survey 🙂

@DaninMCI — Yeah, Hilton is my favorite. If you’ve got a problem with that, then tell me as objectively as you can why HHonors should not be my favorite. But before you launch into the “conventional wisdom” about how SPG points are “valuable” and Hilton points are “devalued” to the point of being useless (HHRUBLES. LOL), you might want to take a look at the objective evidence here:

https://milepoint.com/forums/threads/exploring-spg-point-values-by-hotel-category.114263/#post-2551672

where I did the math so that you would not have to (you’ll love the glossy charts too). The gist of what you will find at the link that I just provided was, in fact, independently corroborated and crisply summarized in at least this one travel blog that also did the math:

“…Hyatt, Hilton, and Marriott all have award charts that are similarly priced. The fact that Hilton may sometimes charge up to 95,000 points for an award night is compensated for [by] the fact that it can offer 15 points per dollar, while Hyatt offers only 5 points per dollar. Starwood, however, has some incredibly high-priced awards among its top tiers, while IHG Rewards and Club Carlson may offer significant value even after Club Carlson’s recent devaluation.” [link: http://travelcodex.com/2014/03/much-cost-earn-free-night/ ].

The results of the modeling are not what “conventional wisdom”, which seems to be the basis of your comments, would say but the evidence is solid. After you have absorbed it all, and you still want to know why I favor HHonors, please ask specific questions and we’ll have a debate. The purported Hilton “devaluation” simply won’t do.

I won’t get into your beef with JD Power…

G’day 😉

I tend to trust Gary more than the survey on this one, considering he’s writing for his readers, who are an atypical group who are avid consumers of points and miles, while the survey presumably looks for a cross section of program users at large. I’m more interested in what others in this niche are thinking, since their views are somewhat more likely to be directly applicable to me.

I read Gary Leff’s critique of the recent J.D. Power hotel loyalty study with particular interest, as I lead the Travel Practice at J.D. Power, and oversaw the study. I can say with sincerity that I appreciated Mr. Leff taking the effort to comment since his observations quite possibly reflect the responses of others who do not have the benefit of a deeper explanation. When we do these press releases, we have a very short amount of space to provide what we believe to be the study’s key findings, and limit the details of the methodology to a few hundred words.

First, it is important to state what the hotel loyalty study represents. It is a study of loyalty program member satisfaction rather than an evaluation of the broader marketplace of loyalty programs, such as the study conducted by US News and World Report. The US News and World Report rankings exclude customer satisfaction feedback, while our study only considers customer feedback. Using a comparison, if someone conducted a satisfaction study of local radio stations and found the local Opera station was highest rated, all it would represent are the opinions of the station’s own listeners. It wouldn’t mean that the Opera station was the best or most appealing for the broad marketplace. Nor would it mean that everyone should start playing Opera music on their stations. Similarly, Delta Privilege rated best among its US based members, who are guests who travel to Canada and stay at Delta Hotels. If you aren’t part of Delta’s customer base, you likely won’t find their program appealing. It is worth mentioning they ranked third last year in the same study, just a few index points from the top spot, so the results year over year are quite consistent. It’s an important story for the industry as it shows that small chains can create programs that drive loyalty among their own customers despite the lack of distribution capability possessed by larger chains.

Mr. Leff also mentioned the sample sizes in the study. While the sample sizes used in the loyalty program study are smaller than those we use in our larger syndicated studies, we are confident that they are representative of the comparative marketplace. Consider that national opinion polls use samples of 500 to 1000 to reflect the opinions of 250 million people. Another important point is that all respondents were selected using the same methodology so there is no reason to think that any particular loyalty program had an advantage or disadvantage as a result of the sampling procedures.
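For readers who want the arithmetic behind the polling comparison, the standard margin-of-error calculation can be sketched as below. This assumes simple random sampling and a yes/no style proportion, neither of which is confirmed for this study. The sample of 100 is a hypothetical figure included only to show how the margin widens for a small subgroup; the study's actual per-program sample sizes are not given here.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion, simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


# 500 and 1000: the poll sizes cited above; 2900: the approximate survey total;
# 100: a hypothetical subgroup size, not a figure from the study.
for n in (500, 1000, 2900, 100):
    print(f"n={n:>5}: +/- {margin_of_error(n) * 100:.1f} percentage points")
```

The margin depends on the number of respondents behind each individual estimate, so a total sample of about 2900 says little on its own about the precision of any one program's score.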

J.D. Power uses statistical regression models to determine the weight given to the various factors in compiling our satisfaction index. The weights reflect the unique variance in satisfaction ratings explained by the individual contributing areas. In other words, the factors represent the ‘importance weights’ for discriminating one program from another based on overall satisfaction ratings.

Any market research study can be fairly critiqued. It’s just important to be clear on the details behind the study. None of these studies are perfect but I think they serve a good purpose in that they sometimes defy conventional belief. For example, everyone likely has an idea of which loyalty programs are the ‘best’. Perhaps customer satisfaction research challenges these beliefs a bit, just as it does when people argue that small chains can’t truly have effective loyalty programs.

With due respect, my criticism was about the specific components going into your analysis, and specifically the relative weight given to meaningless items.

Regarding sample size, you haven’t answered the question of how many participants in your small sample had any awareness of the relatively obscure programs that you’re ranking.

While we’re asking questions, how many programs is the average respondent a member of? How frequently do they travel / how many hotel nights per year do they consume?

Even if you separate ‘satisfaction’ from ‘quality’ (suggesting people are happy with mediocre offerings perhaps) the things you’re asking and weighting, and the people you’re deriving that information from, simply do not get you an answer to that question.

Finally, I notice you do not deny that you sell your survey research to the companies included in your rankings and you license use of the name to the winner.

Let me take your questions/comments one by one.

As I mentioned previously, J.D. Power does not assign weights to the various components, but rather they are derived through statistical regression modeling. These models consider the unique variance that each component contributes to overall satisfaction with the loyalty programs. Put more simply, some components may seem more important to you than the ones to which the model ascribes greater weight, but they aren’t as discriminating for predicting satisfaction. Using a hotel example, we’d likely agree that hotel cleanliness is one of the most important aspects of hotel satisfaction. Yet, it tends to be less predictive of satisfaction than many other attributes because there isn’t a lot of variance between major branded hotel chains on cleanliness. So, if you want to drive your satisfaction ratings, you don’t focus on the things you and everyone else are already doing well, you focus on the areas which represent the greatest opportunity for differentiation, which are reflected in the derived weights.
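The cleanliness point can be illustrated with a small simulation. This is a sketch on synthetic data, not J.D. Power's actual model: it uses squared correlation with overall satisfaction as a stand-in for "unique variance explained", which is a reasonable shortcut only because the two made-up attributes are generated independently. All names, coefficients, and distributions are assumptions chosen for illustration.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def derived_weights(attributes, overall):
    """Share of explained variance per attribute (valid shortcut for independent attributes)."""
    r_squared = {name: pearson_r(vals, overall) ** 2 for name, vals in attributes.items()}
    total = sum(r_squared.values())
    return {name: value / total for name, value in r_squared.items()}


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 2000

# Cleanliness matters a lot per unit (coefficient 3.0) but barely varies.
cleanliness = [rng.gauss(9.0, 0.1) for _ in range(n)]
# Redemption matters less per unit (coefficient 1.0) but varies widely.
redemption = [rng.gauss(6.0, 2.0) for _ in range(n)]
overall = [3.0 * c + 1.0 * r + rng.gauss(0.0, 0.5)
           for c, r in zip(cleanliness, redemption)]

weights = derived_weights(
    {"cleanliness": cleanliness, "ease of redemption": redemption}, overall
)
for name, share in weights.items():
    print(f"{name}: {share:.1%}")
```

In this setup the attribute with the larger per-unit effect ends up with the much smaller derived weight, simply because respondents hardly differ on it. That is the distinction between what a derived weight measures (how well a factor discriminates between ratings) and how much a factor matters to a traveler.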

To your second question, we don’t capture awareness of the various programs since it isn’t really relevant for what we are studying and reporting. The only thing we measure is satisfaction among those that belong to the programs they are being asked to rate. We aren’t measuring Hilton guests’ opinions of Delta Privilege, for example.

I had one of our team members run the numbers to answer your third question about whether people in this study belong to multiple programs. The respondents in our study belong to an average of 2.48 loyalty programs, which means they have some basis for comparative ranking, even if they are being asked to rate program satisfaction in isolation from comparisons to others. The panels we use tend to recruit from frequent traveler programs, so we have pretty good representation from seasoned travelers.

One other key to understanding the findings of the study: we allow the respondents to naturally fall out among status levels. One of the reasons why Delta Privilege was highest ranked is that it is one of the easiest programs in which to reach elite status, and being able to achieve elite status quickly is a big part of why people like loyalty programs. Starwood, for example, had one of the lowest percentages of elites in the study. We don’t bias the sampling, but it indicates Starwood has a lot of people who sign up for the SPG program, but don’t engage with it very much. You can say this is a design flaw, but I’d argue program engagement is itself an indicator of the appeal of a program.

Lastly, I always appreciate the opportunity to address the question of companies purchasing the research and licensing our name. One of the commenters intimated there is a ‘pay to play’ system going on in our studies. Given the nature of how these studies are conducted, there is no way to ‘game’ the results—there is no way to ‘stuff the ballot box’, nor determine rankings based on relationships with our company. J.D. Power is a global marketing information services company that created its reputation by conducting independent surveys of customer satisfaction, quality and buyer behavior. J.D. Power syndicated studies are not funded by the companies that are measured. Due to the self-funded nature of the studies, the data and studies are owned by J.D. Power. You can imagine the immediate blow to our credibility were these studies not conducted with the strictest of integrity and methodological rigor.

To recoup our investment in the research, industry players are able to subscribe to the research results. Companies that rank highest in our studies receive the J.D. Power award, regardless of whether they subscribe to the research or not. There is a very strict wall of separation between the research and licensing sides of our business. (Besides, one need not look any further than the current study to prove the top ranked are not always those that purchase J.D. Power services.) J.D. Power permits top-ranking companies to use the awards in advertising through licensing arrangements, which include a fee. The licensing fee pays for the administration of the program, which ensures that the award information being used in ads is accurate, consistent and protects consumers from any misrepresentation of the awards.

Again, I really do welcome the questions and scrutiny you provide. We ought to be able to credibly explain our work in any public forum. Space limitations prevent me from commenting further, but I would be happy to address any further questions in a follow-up call, or better yet, to get together with you for drinks sometime if we ever travel to a mutually convenient location.

These defenses of the survey are sort of silly. You dumb down their meaning here in the comments. If you’re only measuring what you say you are measuring, then the survey tells us nothing at all. Of course, that’s not what your PR shop is pitching to the media, or how it’s being portrayed.

It’s widely known that JD Power is pay to play, in a subtle way: if you don’t license the results, you don’t keep repeating the survey.